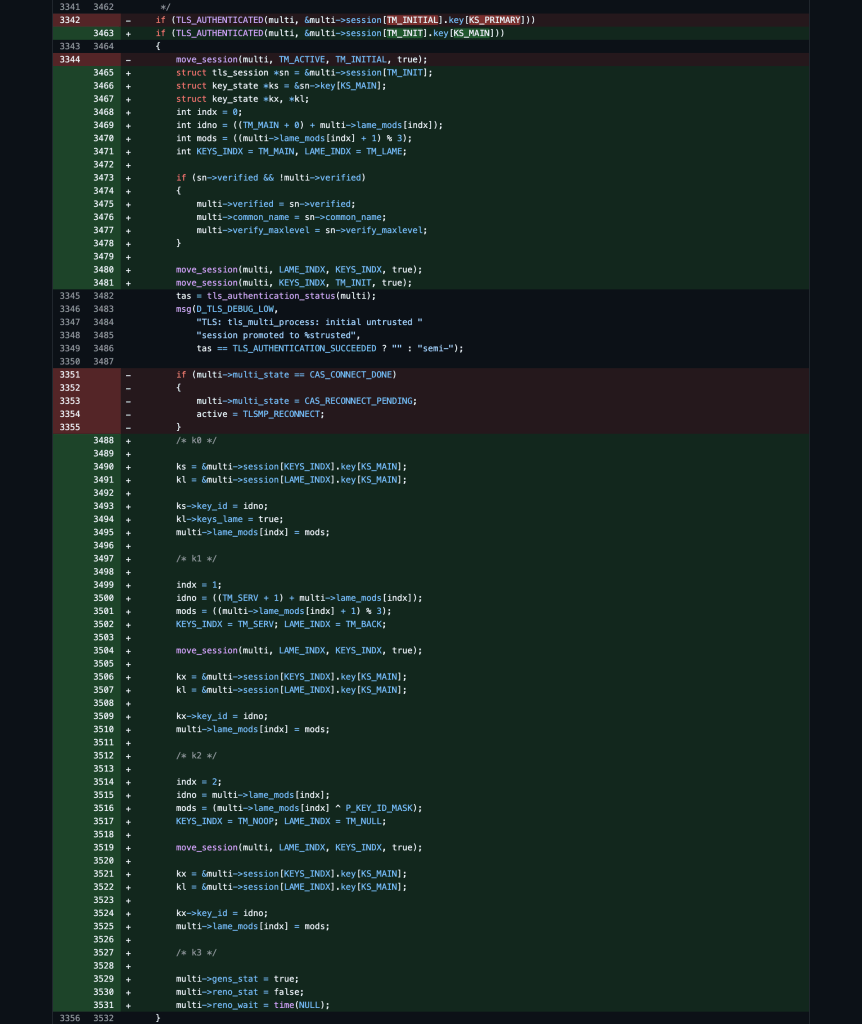

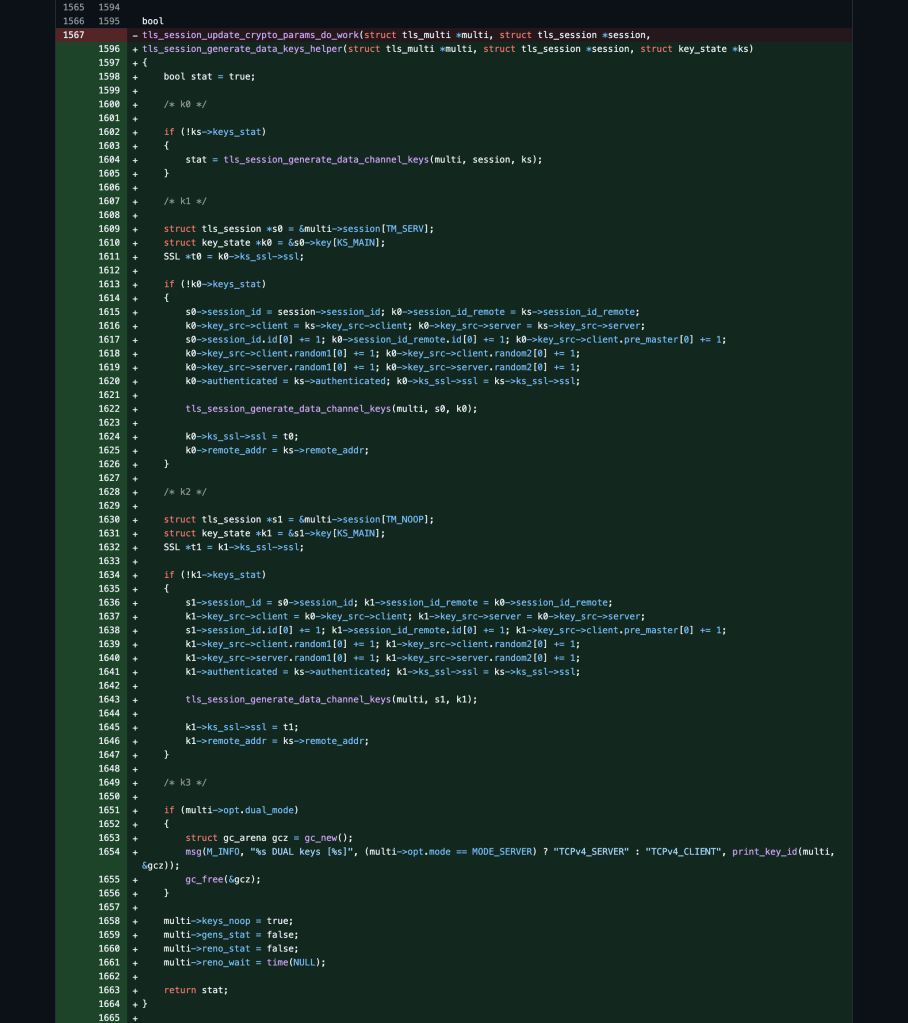

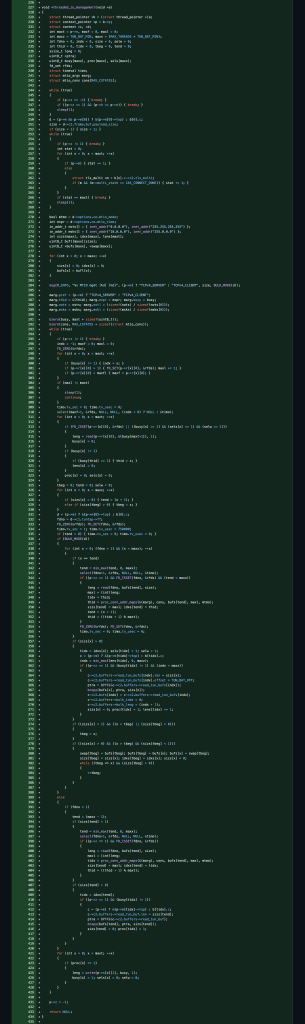

Edit-Edit: After several more days of tracing connection-state edge cases, I was able to greatly improve the performance of this modification. I had to rewrite most of the ssl, socket, packet, forward, and multi/io libraries, and I removed some unneeded code paths, including the fragment, ntlm, occ, ping, proxy, reliable, and socks libraries. I also filed a couple more code-quality PRs ( #4 ~ #5 ).

Edit: After several days of testing and debugging, I found a couple of bugs in the OpenVPN source code and reported them to the developers ( #1 ~ #2 ~ #3 ). I was finally able to get 3 parallel session key states negotiated and renegotiated, something the framework never accounted for! The framework does allow a 3-bit key ID field to be used and negotiated, which gives 8 possible key IDs (0 through 7).
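To make the 3-bit limit concrete: in OpenVPN's wire format the first byte of a packet carries the opcode in its upper 5 bits and the key ID in its lower 3 bits. The following is only a minimal Python sketch of that packing; the helper names `pack_op` and `unpack_op` are hypothetical and are not functions from the OpenVPN source.

```python
KEY_ID_BITS = 3
KEY_ID_MASK = (1 << KEY_ID_BITS) - 1  # 0x07, so key IDs can only be 0..7
OPCODE_MAX = (1 << 5) - 1             # the remaining 5 bits hold the opcode


def pack_op(opcode, key_id):
    """Combine an opcode and a key ID into one header byte (hypothetical helper)."""
    if not 0 <= opcode <= OPCODE_MAX:
        raise ValueError("opcode does not fit in 5 bits")
    if not 0 <= key_id <= KEY_ID_MASK:
        raise ValueError("key ID does not fit in 3 bits")
    return (opcode << KEY_ID_BITS) | key_id


def unpack_op(first_byte):
    """Split a header byte back into (opcode, key_id) (hypothetical helper)."""
    return first_byte >> KEY_ID_BITS, first_byte & KEY_ID_MASK


# Example: a data packet (opcode 6) tagged with key ID 5.
header = pack_op(6, 5)
opcode, key_id = unpack_op(header)
```

Because the field is only 3 bits wide, every key in use at the same moment has to share the same eight values, which is why the ID ranges are split up by direction in this post.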

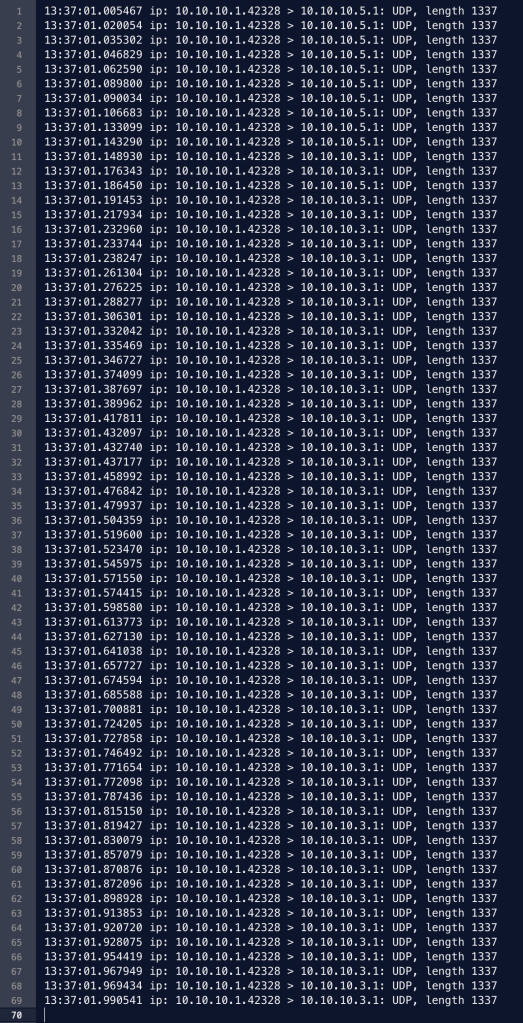

Session-State-Key-IDs(1,2,3): Client to Server Traffic Communication

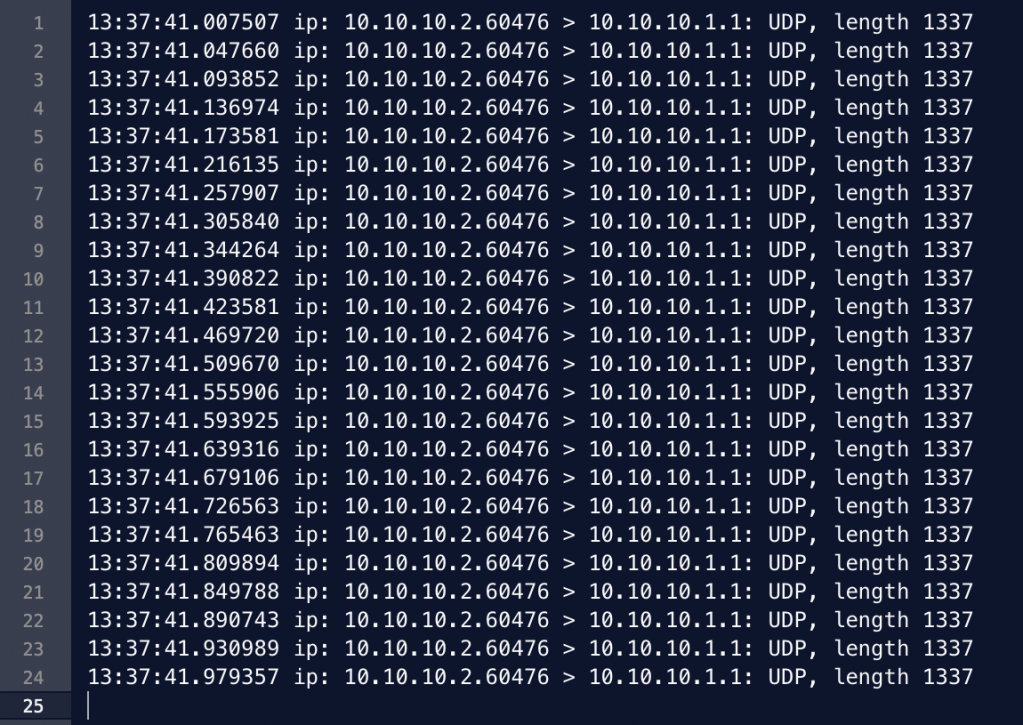

Session-State-Key-IDs(4,5,6): Server to Client Traffic Communication

Session-State-Key-IDs(0,7): Backup General Traffic Communication

We first negotiated our set of 3 keys for each of the 4 threads in this 1 process:

Session State Key IDs: no=1, no=7, no=4

2025-11-02 21:05:23 TCPv4_CLIENT DUAL keys [5] [ [key#0 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=1 sid=0eb81f5c fb6a0553] [key#1 state=S_INITIAL auth=KS_AUTH_FALSE id=0 no=0 sid=00000000 00000000] [key#2 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=7 sid=be550b82 c30fb5ec] [key#3 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=4 sid=d0f67472 3dc74469] [key#4 state=S_INITIAL auth=KS_AUTH_FALSE id=0 no=0 sid=00000000 00000000]]
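A log line like the one above is easier to read once it is split into its individual key slots. Here is a small Python sketch that does so with a regular expression; the field layout is taken from the log line itself, while the function names `parse_key_states` and `active_key_numbers` are made up for this example.

```python
import re

# One "[key#N state=... auth=... id=... no=... sid=xxxxxxxx xxxxxxxx]" entry.
KEY_RE = re.compile(
    r"\[key#(?P<slot>\d+) state=(?P<state>\w+) auth=(?P<auth>\w+) "
    r"id=(?P<id>\d+) no=(?P<no>\d+) sid=(?P<sid>[0-9a-f]{8} [0-9a-f]{8})\]"
)

SAMPLE = (
    "2025-11-02 21:05:23 TCPv4_CLIENT DUAL keys [5] [ "
    "[key#0 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=1 sid=0eb81f5c fb6a0553] "
    "[key#1 state=S_INITIAL auth=KS_AUTH_FALSE id=0 no=0 sid=00000000 00000000] "
    "[key#2 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=7 sid=be550b82 c30fb5ec] "
    "[key#3 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=4 sid=d0f67472 3dc74469] "
    "[key#4 state=S_INITIAL auth=KS_AUTH_FALSE id=0 no=0 sid=00000000 00000000]]"
)


def parse_key_states(line):
    """Return one dict per key slot found in the log line."""
    return [
        {
            "slot": int(m["slot"]),
            "state": m["state"],
            "auth": m["auth"],
            "no": int(m["no"]),
            "sid": m["sid"],
        }
        for m in KEY_RE.finditer(line)
    ]


def active_key_numbers(line):
    """List the 'no=' values of the slots that have generated keys."""
    return [k["no"] for k in parse_key_states(line) if k["state"] == "S_GENERATED_KEYS"]


active = active_key_numbers(SAMPLE)
```

For the sample line, the slots with generated keys carry `no=1`, `no=7`, and `no=4`, matching the session state key IDs listed above.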

We then renegotiated each of the 3 keys for each of the 4 threads for a total of 12x keys:

Session State Key IDs: no=2, no=0, no=5

Lame State Key IDs: no=1, no=7, no=4

2025-11-02 21:06:09 TCPv4_CLIENT DUAL keys [5] [ [key#0 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=2 sid=68cc0579 08edeaed] [key#1 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=1 sid=0eb81f5c fb6a0553] [key#2 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=0 sid=66f6bd41 5b9c05bd] [key#3 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=5 sid=af1447d1 95e7c215] [key#4 state=S_GENERATED_KEYS auth=KS_AUTH_TRUE id=0 no=4 sid=d0f67472 3dc74469]]

The second key listed after each primary key is its lame key, which is kept for backup purposes only. Lame keys are set to expire and will be rotated out upon the next key renegotiation.
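The rotation seen in the two log lines above can be modelled in a few lines of Python. This is only a toy model, not the fork's C code: the `KeySlot` class is hypothetical, and the order in which IDs cycle inside each group (including the wrap-around) is an assumption inferred from the two log lines.

```python
# Key-ID groups as described in this post. The cycling order inside each
# group is an assumption based on the logged values (1->2, 7->0, 4->5).
GROUPS = {
    "client_to_server": (1, 2, 3),
    "backup": (7, 0),
    "server_to_client": (4, 5, 6),
}


class KeySlot:
    """Toy model of one session key state with an active and a lame key."""

    def __init__(self, group):
        self.ids = GROUPS[group]
        self.index = 0
        self.active = self.ids[0]
        self.lame = None  # no lame key until the first renegotiation

    def renegotiate(self):
        # The old active key becomes the lame (backup) key, and any
        # previous lame key is dropped.
        self.lame = self.active
        self.index = (self.index + 1) % len(self.ids)
        self.active = self.ids[self.index]


slots = [KeySlot(name) for name in GROUPS]
initial_active = [s.active for s in slots]

for s in slots:
    s.renegotiate()

active_after = [s.active for s in slots]
lame_after = [s.lame for s in slots]
```

After one renegotiation the model holds active IDs 2, 0, 5 and lame IDs 1, 7, 4, the same split shown in the second log line.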

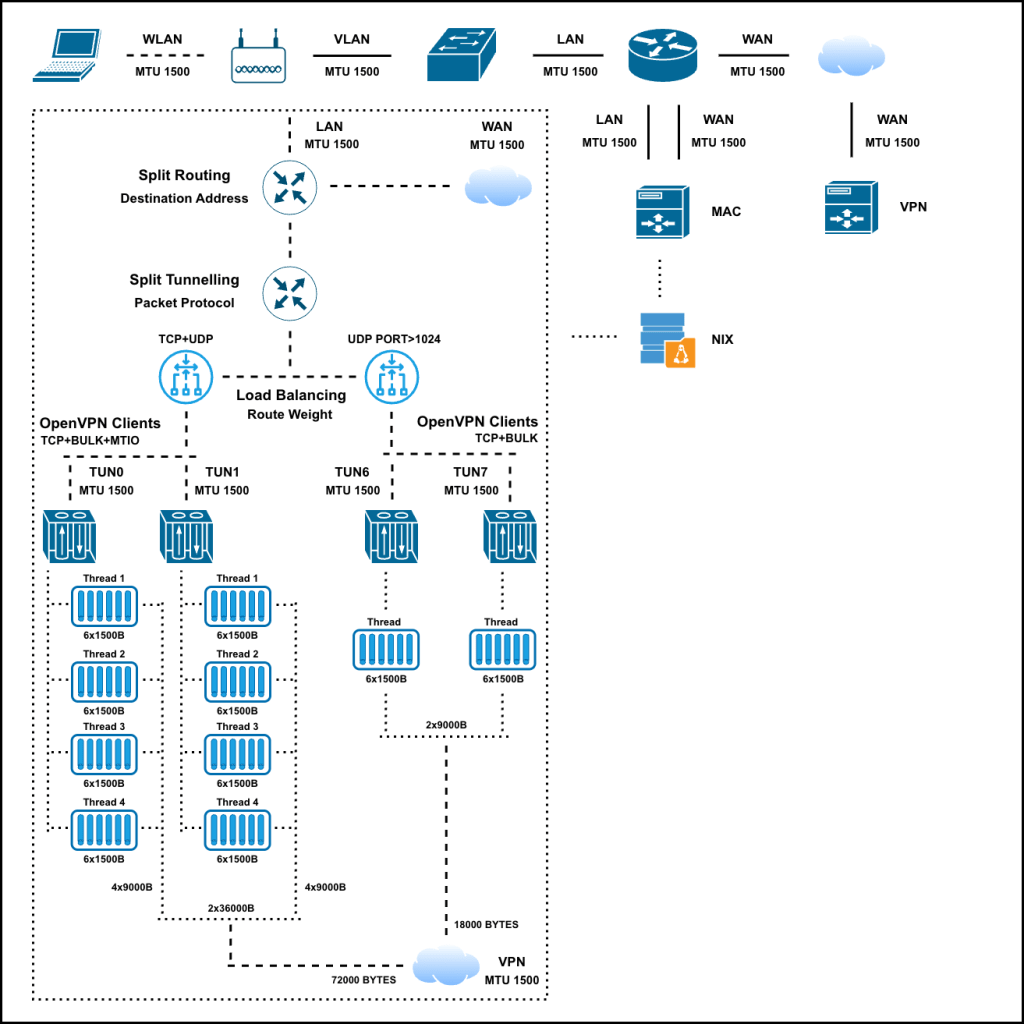

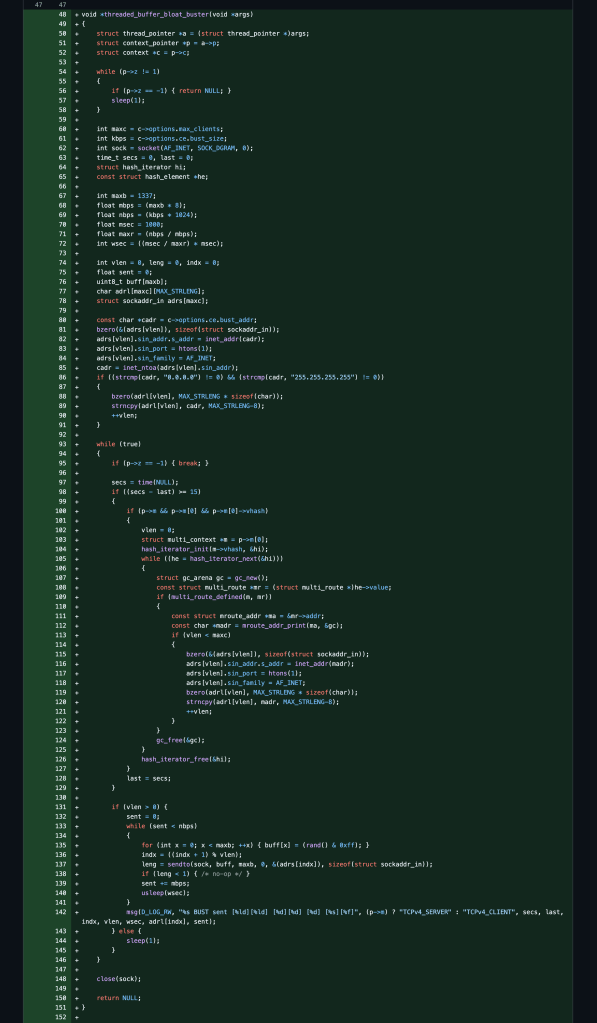

When it comes to tunnelling and proxying data, there are in general two independent pipeline directions: read-link->send-tunn (RL->ST) and read-tunn->send-link (RT->SL). In the bulk-mode source code these two directions shared some limiting variables, namely c2.buf and m->pending, so I separated them out to let data processing operate independently for RL->ST and RT->SL. I also added an additional session state cipher key in the new dual-mode, so that the PRIMARY key handles client->server encryption/decryption on its own and the new THREAD key is used for server->client traffic.
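As a rough illustration of why un-sharing those variables matters, here is a toy Python model in which each direction owns its buffer, its pending flag, and its key. The `Direction` class and its fields are hypothetical stand-ins for the per-direction copies of c2.buf and m->pending; the key assignment assumes the client's point of view, where RT->SL carries client->server traffic.

```python
from collections import deque


class Direction:
    """One pipeline direction with its own buffer, pending flag, and key."""

    def __init__(self, name, key_name):
        self.name = name
        self.key_name = key_name  # session key this direction uses
        self.buf = deque()        # stand-in for a per-direction c2.buf
        self.pending = False      # stand-in for a per-direction m->pending

    def read(self, packet):
        """Queue a packet that was read on this direction's input side."""
        self.buf.append(packet)
        self.pending = True

    def send(self):
        """Flush queued packets, tagging each with this direction's key."""
        out = [(self.key_name, p) for p in self.buf]
        self.buf.clear()
        self.pending = False
        return out


# Client-side view (assumption): outgoing traffic uses PRIMARY, incoming THREAD.
rt_sl = Direction("read-tunn->send-link", "PRIMARY")
rl_st = Direction("read-link->send-tunn", "THREAD")

rt_sl.read(b"outgoing")
# Work queued in one direction leaves the other direction's state untouched.
other_is_idle = not rl_st.pending
flushed = rt_sl.send()
```

With a single shared buffer and pending flag, work queued for one direction would hold up the other; with one set per direction, each side can be read and flushed on its own schedule.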

~

~

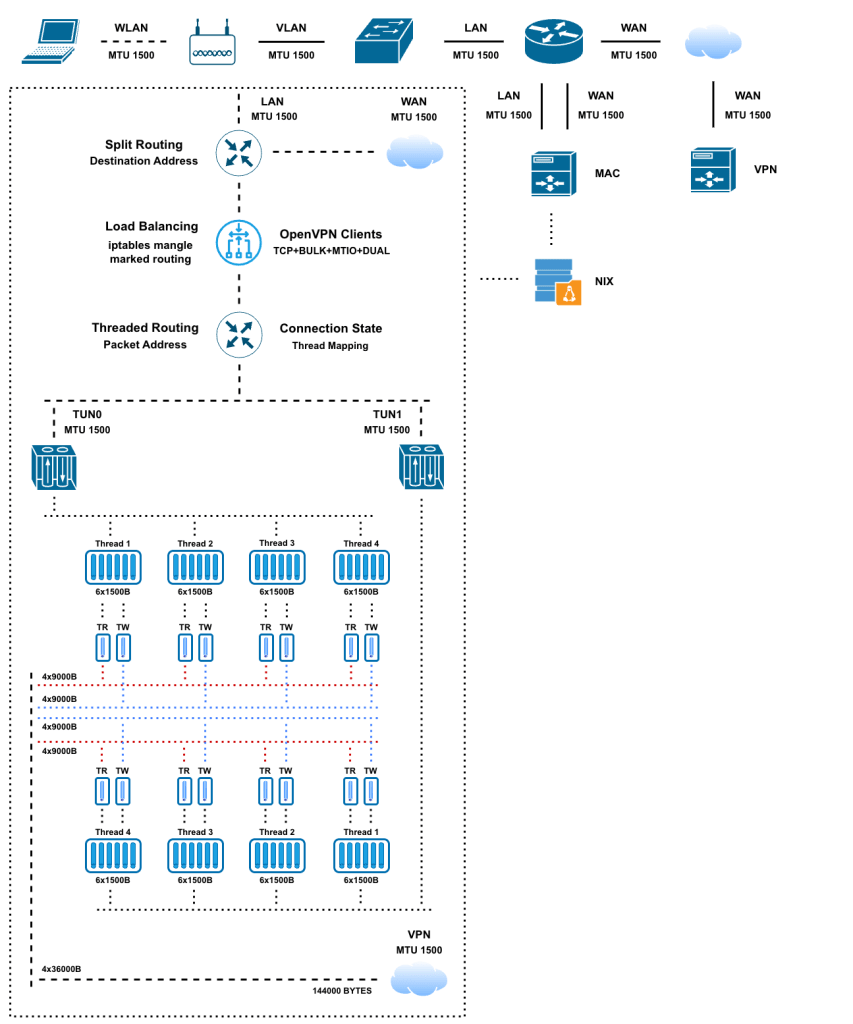

Using TCP+BULK+MTIO+DUAL modes together, with iptables performing dynamically distributed load balancing over 2x OpenVPN process tunnels running with 16x available threads, I am now able to put 144,000 bytes of data at a given time onto the 1500-byte MTU VPN link, the equivalent of 96 full-size packets!

~

Commit Code: github.com/stoops/openvpn-fork/compare/mtio…dual

Pull Request: github.com/OpenVPN/openvpn/pull/884

Complete Commits: github.com/stoops/openvpn-fork/compare/master…bust

~