So I’ve been running my heavily modified version of OpenVPN (bulk mode + mtio mode) at the core of my home network for a few weeks now. I spent several days tuning the code, and it now lets me reach near-full line speed on my WAN link. I have also submitted my proof-of-concept code as pull requests to the OpenVPN GitHub repository for anyone who wants to take a look.

The devs there told me that they once pursued making OpenVPN multi-threaded, but they gave up on that idea some time ago and now prefer their “DCO” (data channel offload) kernel module instead. I suspect that when WireGuard became popular, more priority was placed on kernel-level speed and performance so that OVPN could compete. That is not a bad choice, but it is still worth optimizing the code base in ways other than at the kernel level. My argument is that many OpenVPN customers may be running clients on embedded hardware or non-Linux operating systems, and improving user-level performance would still be a good selling point and a distinguishing feature. That is something WireGuard cannot easily offer, since it is kernel-level and UDP-only, which in my opinion is a poor overall design decision.

For my use case in particular, being able to run OVPN at the user level over TCP solved the small-MTU issue I was running into with all my WiFi clients, and it has greatly improved the overall speed and performance of my network-wide VPN setup. That is why I decided to work on this project framework instead!

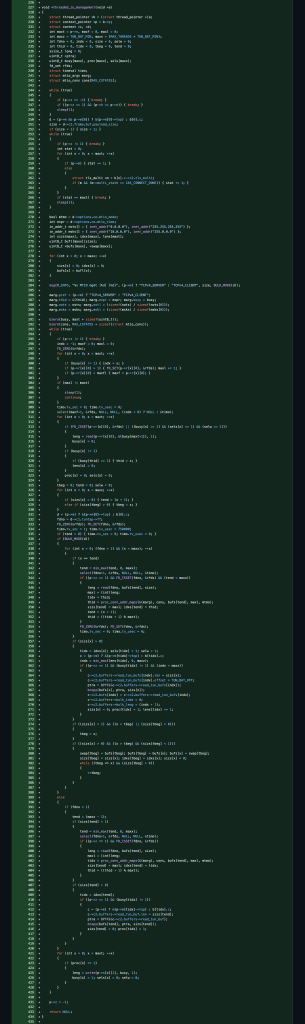

I was able to update my original multi-threaded code to operate a bit more cleanly, and it no longer depends on any thread-locking techniques. Instead, it uses a file-descriptor substitution technique: the TUN read file descriptor is separated from the TUN write file descriptor, and the read side is remapped to a socket pair, which is now used for signalling and linked to an extra management thread. This change lets the new management thread perform multiple dedicated TUN device reads and pre-fill 1500 bytes of data into each of 6 context buffers for 4 simultaneous streams. That allows up to 36,000 bytes (1500 × 6 × 4) of tunnel data to be processed, encrypted, and transmitted in parallel, all at the same time!
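A minimal sketch of the descriptor-swap idea, assuming a design where the socket pair carries one-byte buffer indices in both directions: the management thread sends an index once it has filled that buffer from the TUN device, and the worker sends the same index back when it is done, so each buffer has exactly one owner at a time and no mutex is needed. The names (`struct prefill`, `manager_thread`, `worker_next`) are hypothetical and not taken from the actual pull requests, only one of the 4 streams is shown, and in testing a plain pipe can stand in for the TUN device so the example runs without root.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

#define NUM_BUFS 6      /* context buffers per stream, as in the post */
#define BUF_SIZE 1500   /* one MTU-sized TUN read */

/* Hypothetical per-stream state shared by the two threads. */
struct prefill {
    int tun_fd;                         /* real TUN read fd, used only by the manager */
    int sig_fd;                         /* manager's end of the socket pair */
    unsigned char buf[NUM_BUFS][BUF_SIZE];
    ssize_t len[NUM_BUFS];
};

/* Management thread: fill a free buffer from TUN, then send its index over
 * the socket pair. Indices come back from the worker once it is done, so a
 * buffer is only ever touched by one thread at a time (no mutex). */
static void *manager_thread(void *arg)
{
    struct prefill *p = arg;
    uint8_t idx;
    int primed = 0;

    for (;;) {
        if (primed < NUM_BUFS)
            idx = (uint8_t)primed++;          /* first pass: all buffers are free */
        else if (read(p->sig_fd, &idx, 1) != 1)
            break;                            /* worker went away */

        ssize_t n = read(p->tun_fd, p->buf[idx], BUF_SIZE);
        if (n <= 0)
            break;                            /* TUN closed or read error */
        p->len[idx] = n;
        if (write(p->sig_fd, &idx, 1) != 1)
            break;
    }
    shutdown(p->sig_fd, SHUT_WR);             /* EOF tells the worker to stop */
    return NULL;
}

/* Worker side: where the event loop used to read the TUN fd, it now reads a
 * buffer index from the substituted fd (the other end of the socket pair).
 * Returns the packet length, or -1 once the manager has shut down. */
static ssize_t worker_next(struct prefill *p, int sub_fd, unsigned char *out)
{
    uint8_t idx;
    if (read(sub_fd, &idx, 1) != 1)
        return -1;
    ssize_t n = p->len[idx];
    memcpy(out, p->buf[idx], (size_t)n);      /* stand-in for encrypt + send */
    if (write(sub_fd, &idx, 1) != 1)          /* hand the buffer back */
        return -1;
    return n;
}
```

Because TUN writes keep their own, untouched descriptor, only the read path changes: the event loop polls the substituted fd where it used to poll the TUN read fd. Here the read/write calls on the socket pair are what hand buffer ownership between the threads.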

Edit: I have updated this functionality to incorporate these 4 phases:

- Data Read / Process

- Thread Association / Ordering

- Buffer Ordering / Compaction

- Data Process / Send
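One possible reading of the four phases, sketched under the assumption that worker threads may finish out of order but packets should still leave in the order they were read. Everything here is hypothetical: `struct slot`, `fill_slot`, `order_and_compact` and `send_in_order` are illustrative names, streams are assigned round-robin, and appending to a byte array stands in for encrypt-and-send. The slot count mirrors the 4 streams × 6 buffers × 1500 bytes = 36,000 bytes described above.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define NUM_STREAMS 4
#define NUM_BUFS    6
#define BUF_SIZE    1500
#define NUM_SLOTS   (NUM_STREAMS * NUM_BUFS)   /* 24 slots x 1500 bytes = 36,000 */

struct slot {
    unsigned long seq;              /* read order, stamped in phase 1 */
    int stream;                     /* owning stream, chosen in phase 2 */
    size_t len;                     /* 0 means the slot is empty */
    unsigned char data[BUF_SIZE];
};

/* Phases 1-2: copy a packet into a slot, stamp its sequence number and
 * pin it to a stream round-robin. Returns -1 if the packet does not fit. */
static int fill_slot(struct slot *s, unsigned long seq, const void *pkt, size_t len)
{
    if (len == 0 || len > BUF_SIZE)
        return -1;
    memcpy(s->data, pkt, len);
    s->len = len;
    s->seq = seq;
    s->stream = (int)(seq % NUM_STREAMS);
    return 0;
}

/* Phase 3: skip empty slots (compaction) and insert the rest into `out`
 * sorted by sequence number, so completion order no longer matters. */
static size_t order_and_compact(struct slot *slots, size_t n, struct slot **out)
{
    size_t k = 0;
    for (size_t i = 0; i < n; i++) {
        if (slots[i].len == 0)
            continue;
        size_t j = k++;
        while (j > 0 && out[j - 1]->seq > slots[i].seq) {
            out[j] = out[j - 1];
            j--;
        }
        out[j] = &slots[i];
    }
    return k;
}

/* Phase 4: emit buffers in sequence order and free their slots.
 * Appending to `wire` stands in for encrypt + transmit. */
static size_t send_in_order(struct slot **order, size_t k, unsigned char *wire)
{
    size_t off = 0;
    for (size_t i = 0; i < k; i++) {
        memcpy(wire + off, order[i]->data, order[i]->len);
        off += order[i]->len;
        order[i]->len = 0;
    }
    return off;
}
```

Sorting an array of pointers rather than the slots themselves keeps phase 3 from copying 1500-byte buffers around; with at most 24 slots, a simple insertion sort is enough.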

Links

- Bulk Mode

- MTIO Mode

- Dual Mode

- Bust Mode

- All Mods

~