Some time back, I wrote a high-performance, network-wide transparent proxy service in C. It was very fast because it could read 8192 bytes at a time directly off the clients' TCP sockets and forward them in a single write call over TCP to the VPN server. It didn't need a tunnel interface, whose small MTU limits each read and write to fewer than 1500 bytes per function call.
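Here is a minimal sketch of the relay idea described above, using plain blocking file descriptors. It is not the original proxy's code. The helper names `write_all` and `relay_once` are hypothetical, and a real transparent proxy would also need connection handling and an event loop (poll/epoll) around this.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

#define RELAY_BUF_SIZE 8192 /* matches the 8192-byte reads described above */

/* Hypothetical helper: write all len bytes, retrying on short writes and EINTR.
 * Returns 0 on success, -1 on error. */
static int write_all(int fd, const unsigned char *buf, size_t len)
{
    assert(buf != NULL || len == 0);
    while (len > 0) {
        ssize_t n = write(fd, buf, len);
        if (n < 0) {
            if (errno == EINTR)
                continue; /* interrupted by a signal: retry */
            return -1;
        }
        buf += (size_t)n;
        len -= (size_t)n;
    }
    return 0;
}

/* Hypothetical helper: read up to RELAY_BUF_SIZE bytes from client_fd in one
 * call and forward them to server_fd. Returns the number of bytes forwarded,
 * 0 on EOF, or -1 on error. */
static ssize_t relay_once(int client_fd, int server_fd)
{
    unsigned char buf[RELAY_BUF_SIZE];
    ssize_t n;

    do {
        n = read(client_fd, buf, sizeof(buf));
    } while (n < 0 && errno == EINTR);
    if (n <= 0)
        return n; /* EOF or error */
    if (write_all(server_fd, buf, (size_t)n) < 0)
        return -1;
    return n;
}
```

A single read() can pull up to 8192 bytes of stream data at once. The loop in `write_all` only exists because write() on a socket may accept fewer bytes than requested.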

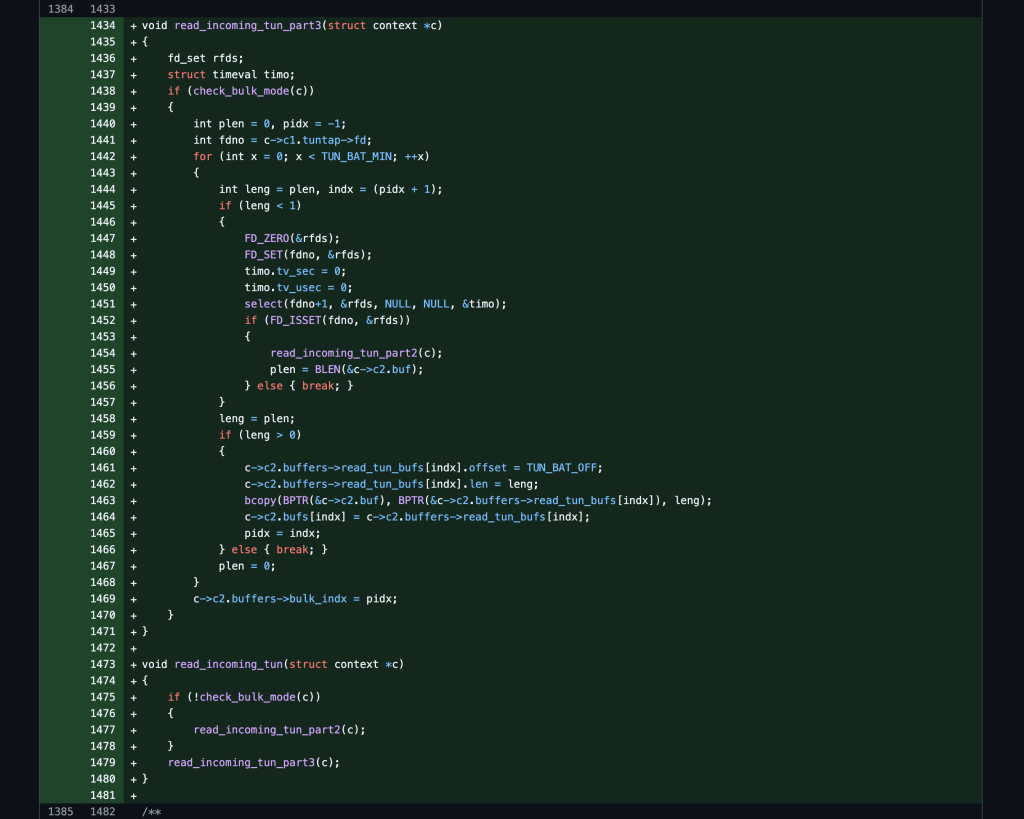

After thinking about it for a while, I came up with a proof of concept that brings similar ideas into OpenVPN's source code. In summary, the improvements are:

- Max-MTU: the tunnel MTU now matches that of the rest of your standard network clients (1500 bytes)

- Bulk-Reads: properly sized reads, called multiple times in a row from the tun interface (6 reads)

- Jumbo-TCP: available in the TCP connection protocol mode only (single, larger write transfers)

- Combined, these changes allow 6 reads x 1500 bytes = 9000 bytes per transfer call
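The arithmetic in the last bullet can be sketched as a batching loop. This is a hypothetical illustration, not the fork's actual code. The function `read_bulk`, the `BULK_*` constants, and the 2-byte big-endian length prefix in front of each packet are all assumptions, added so a receiver could split the batch apart again. The sketch relies on a tun device returning one packet per read().

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

#define BULK_MTU 1500 /* per-packet MTU from the list above */
#define BULK_READS 6  /* tun reads batched into one TCP transfer */
#define BULK_HDR 2    /* hypothetical 2-byte big-endian length prefix */
/* 6 x 1500 = 9000 payload bytes, plus 6 x 2 prefix bytes = 9012 */
#define BULK_BUF_SIZE (BULK_READS * (BULK_HDR + BULK_MTU))

/* Hypothetical: read up to BULK_READS packets from tun_fd into out, each
 * preceded by its length. Returns the total bytes in out, or -1 on error. */
static ssize_t read_bulk(int tun_fd, unsigned char *out, size_t out_cap)
{
    size_t used = 0;
    int packets = 0;

    assert(out != NULL && out_cap >= (size_t)BULK_BUF_SIZE);
    while (packets < BULK_READS) {
        /* Leave room for the length prefix, then read one packet after it. */
        ssize_t n = read(tun_fd, out + used + BULK_HDR, BULK_MTU);
        if (n < 0 && errno == EINTR)
            continue; /* interrupted: retry the same read */
        if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK))
            break;    /* non-blocking fd drained: send what we have */
        if (n < 0)
            return -1;
        if (n == 0)
            break;
        out[used] = (unsigned char)((size_t)n >> 8);
        out[used + 1] = (unsigned char)((size_t)n & 0xFF);
        used += BULK_HDR + (size_t)n;
        packets++;
    }
    return (ssize_t)used;
}
```

The caller would then send the returned batch to the VPN server with one write on the TCP socket (looping on short writes), instead of one write per 1500-byte packet. With a non-blocking tun fd, stopping on EAGAIN means a lightly loaded link still sends small batches rather than waiting for all 6 reads.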

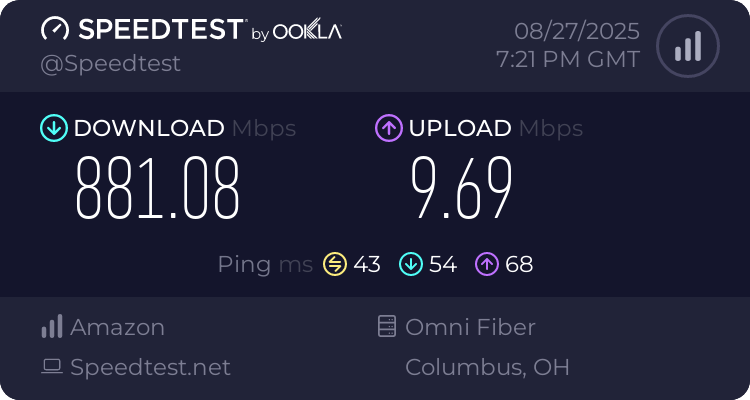

The speed test below was performed on a Linux VM running on my little Mac Mini, which all of my network traffic is piped through, so the full-sized MTU that the clients assume never has to be fragmented or compressed! 🙂

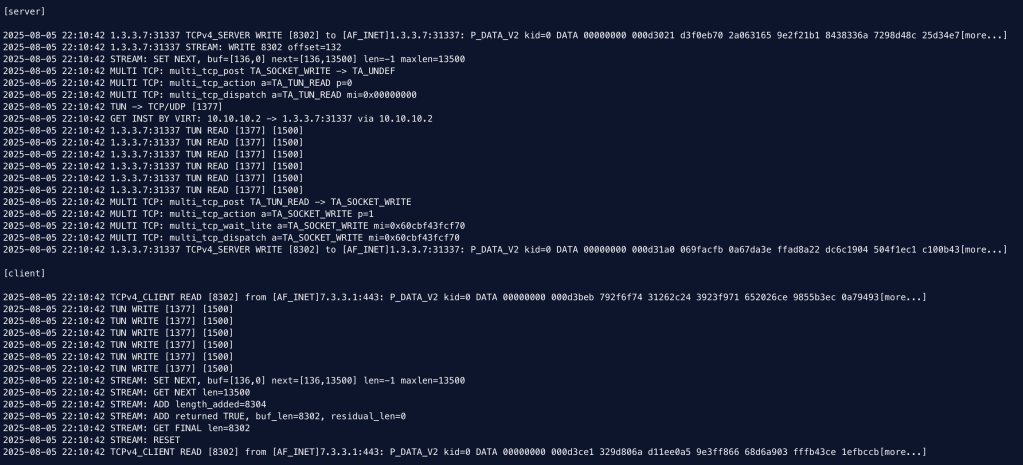

Note: The client and server logs also show the multi-batched TUN READ/WRITE calls alongside the jumbo-sized TCPv4 READ/WRITE calls.
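On the receiving side, one jumbo TCP read has to be split back into individual packets, each written to the tun device with its own call. This is presumably what the batched TUN WRITE calls correspond to. The sketch below assumes each packet in the batch carries a hypothetical 2-byte big-endian length prefix. `write_unbatched` is an illustrative name, and the fork's real framing may differ.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/* Hypothetical: walk a buffer of [2-byte big-endian length][packet] frames
 * taken from one large TCP read, writing each complete packet to tun_fd with
 * its own write() call. Returns the number of bytes consumed (a trailing
 * partial frame is left for the caller), or -1 if a tun write fails. */
static ssize_t write_unbatched(int tun_fd, const unsigned char *buf, size_t len)
{
    size_t off = 0;

    assert(buf != NULL || len == 0);
    while (len - off >= 2) {
        size_t pkt_len = ((size_t)buf[off] << 8) | buf[off + 1];
        if (len - off - 2 < pkt_len)
            break; /* incomplete frame: wait for the next TCP read */
        ssize_t n;
        do {
            n = write(tun_fd, buf + off + 2, pkt_len);
        } while (n < 0 && errno == EINTR);
        if (n < 0)
            return -1;
        off += 2 + pkt_len;
    }
    return (ssize_t)off;
}
```

Because TCP is a byte stream, a read can end in the middle of a frame. Returning the consumed count lets the caller keep the leftover bytes and prepend them to the next read.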

Note-Note: My private VPS link is 1.5 Gbps, my internet link is 1 Gbps, and my upload speed is hard rate-limited by iptables. This test was also run from a Wi-Fi network client rather than from the VPN host itself, which to me makes it a bit more impressive.

Edit: The small-MTU problem that can affect both WireGuard and OpenVPN in UDP mode is documented by another poster here: https://gist.github.com/nitred/f16850ca48c48c79bf422e90ee5b9d95

~

~

~

~

I created a new GitHub repo with a branch and commit containing the changes to the source code.

Patch Diff / Fork Pull:

- https://github.com/OpenVPN/openvpn/compare/master…stoops:openvpn-fork:bulk

- https://github.com/OpenVPN/openvpn/pull/814/files

Mailing List:

- https://sourceforge.net/p/openvpn/mailman/openvpn-devel/thread/CAOh3LPOwuAO84y7kUcWNQFVes8rwRg6WZ9dAUBTAqLTrDMtaLQ%40mail.gmail.com/#msg59216900

- https://sourceforge.net/p/openvpn/mailman/openvpn-devel/thread/CAOh3LPMK7T2gduJXrKaQRDvPZdjCiBJtCqXWiFT0xj4TAaj2LA%40mail.gmail.com/#msg59217705

- https://sourceforge.net/p/openvpn/mailman/openvpn-devel/thread/CAOh3LPMdF0i5h9sSfvNUTpwLaVA3OexuB6u0SA5igap2SONi3w%40mail.gmail.com/#msg59218284

~